Best Open Source Load Balancers for WordPress

Choosing the best open-source load balancer for a WordPress website is crucial for handling increased traffic and ensuring smooth performance. This deep dive explores the open-source options, from foundational concepts to advanced deployment strategies. It covers the different types of load balancers, security considerations, and performance optimization.

The right choice can significantly improve your site’s scalability and reliability. This guide walks you through the decision so you can pick the solution that best fits your WordPress needs.

Introduction to Load Balancers

A load balancer is a critical component in modern network architecture. It acts as a traffic director that routes incoming requests to multiple backend servers. It distributes the workload across these servers according to a balancing algorithm, such as round robin or least connections, so that no single server becomes overloaded. This function underpins the high availability, performance, and scalability of web applications and services. Load balancers are essential for handling growing traffic, keeping response times consistent, and preventing service disruptions.

They also add resilience. Because traffic can be routed away from a failed server, applications can scale out and absorb fluctuating demand without interruption. This matters most in high-traffic environments, where a single server failure would otherwise affect many users.
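To make the idea of distributing requests concrete, here is a minimal Python sketch of round robin, the simplest balancing algorithm. The backend addresses are hypothetical. Real load balancers such as HAProxy and Nginx implement this internally, so you would not normally write it yourself.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out backends in a fixed rotation (round robin)."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._rotation = cycle(list(backends))

    def next_backend(self):
        """Return the next backend in the rotation."""
        return next(self._rotation)


# Hypothetical backend pool for a WordPress site.
balancer = RoundRobinBalancer(["10.0.0.11:80", "10.0.0.12:80", "10.0.0.13:80"])
assignments = [balancer.next_backend() for _ in range(6)]
print(assignments)  # each server receives two of the six requests
```

Plain round robin assumes the servers have roughly equal capacity. When they do not, weighted round robin or least connections usually spreads the load more fairly.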

Types of Load Balancers

Load balancers come in various forms, each with its own strengths and weaknesses. Understanding these differences is crucial for choosing the right solution for specific needs. The key categories include hardware, software, and cloud-based load balancers.

Hardware Load Balancers

Hardware load balancers are dedicated physical devices specifically designed for load balancing tasks. They typically offer high performance and throughput, making them suitable for demanding applications with substantial traffic volumes. Their specialized hardware architecture allows for efficient packet processing and distribution. They often provide advanced features like deep packet inspection and sophisticated traffic management capabilities.

Software Load Balancers

Software load balancers run on general-purpose servers, leveraging software to perform load balancing functions. This approach offers flexibility and cost-effectiveness compared to hardware load balancers. Software load balancers are often deployed in virtualized environments or on cloud platforms. They can be readily scaled to meet changing needs and are often integrated with existing infrastructure.

Cloud-Based Load Balancers

Cloud-based load balancers are managed and hosted by cloud providers, offering a scalable and easily manageable solution. They integrate seamlessly with other cloud services and provide automatic scaling capabilities. These load balancers typically offer high availability and are optimized for cloud-native applications. They handle the infrastructure management, freeing up resources for application development and maintenance.

Comparison of Load Balancer Types

| Type | Architecture | Advantages | Disadvantages |
|---|---|---|---|
| Hardware | Dedicated physical device | High performance, advanced features, dedicated resources | Higher initial cost, less flexible, potential maintenance overhead |
| Software | Software running on a server | Cost-effective, flexible, scalable, easier deployment | Performance may be lower than hardware, requires managing the server |
| Cloud-Based | Managed by cloud provider | Scalability, high availability, automatic scaling, ease of management | Vendor lock-in, potential dependency on cloud provider’s infrastructure, limited customization |

Open Source Load Balancers Overview

Load balancers are crucial components in modern web architectures, distributing incoming traffic across multiple servers to enhance performance, scalability, and reliability. Open-source load balancers offer a cost-effective alternative to commercial solutions, empowering developers and organizations to tailor solutions to their specific needs. This overview explores popular open-source load balancers, their features, and key differences.

Popular Open-Source Load Balancers

Several open-source load balancers are widely used and trusted. Key players include HAProxy, Nginx, and Traefik, each with unique strengths and weaknesses. Understanding these differences helps in selecting the optimal solution for a particular application or infrastructure.

HAProxy

HAProxy is a high-performance, versatile load balancer known for its robustness and efficiency. It excels in handling a high volume of requests, offering sophisticated features for session persistence and health checks. HAProxy’s configuration is typically done using a configuration file, which allows for fine-grained control over traffic routing and management.

Nginx

Nginx, originally designed as a web server, has evolved into a capable load balancer. Its lightweight nature makes it suitable for handling a large number of concurrent connections efficiently. Nginx’s configuration is often considered more straightforward than HAProxy’s, making it easier for beginners to implement. A key strength is its strong integration with other Nginx features like reverse proxying and caching.

Traefik

Traefik stands out as a modern, dynamic load balancer that is particularly suited to microservice architectures. It discovers services automatically from providers such as Docker and Kubernetes and configures routing for them, which simplifies scaling and managing many services. Its dynamic configuration is ideal for environments where services are frequently added or removed, and it removes much of the manual configuration work such deployments would otherwise require.
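The core idea behind dynamic configuration is a backend pool that changes at runtime, without a reload, as services register and deregister. The Python sketch below illustrates only that idea. The `ServiceRegistry` class, its methods, and the service URLs are hypothetical and are not Traefik’s API. Traefik itself reads this information from its providers.

```python
import threading


class ServiceRegistry:
    """Hypothetical registry whose backend pool can change while serving traffic."""

    def __init__(self):
        self._lock = threading.Lock()
        self._services = {}  # service name -> list of backend URLs
        self._counters = {}  # service name -> round-robin position

    def register(self, service, url):
        with self._lock:
            backends = self._services.setdefault(service, [])
            if url not in backends:
                backends.append(url)

    def deregister(self, service, url):
        with self._lock:
            backends = self._services.get(service, [])
            if url in backends:
                backends.remove(url)

    def pick(self, service):
        """Round robin over whatever backends are registered right now."""
        with self._lock:
            backends = self._services.get(service)
            if not backends:
                raise LookupError(f"no backends registered for {service!r}")
            index = self._counters.get(service, 0) % len(backends)
            self._counters[service] = index + 1
            return backends[index]


registry = ServiceRegistry()
registry.register("wordpress", "http://wp-1:80")  # hypothetical container URLs
registry.register("wordpress", "http://wp-2:80")
print(registry.pick("wordpress"), registry.pick("wordpress"))
registry.deregister("wordpress", "http://wp-1:80")  # e.g. a container was stopped
print(registry.pick("wordpress"))  # only wp-2 remains in the pool
```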

Comparison Table

| Load Balancer Name | Supported Protocols | Key Features | Supported Platforms |
|---|---|---|---|
| HAProxy | HTTP, HTTPS, TCP (UDP mainly for QUIC/HTTP/3) | High performance, robust, flexible routing rules, session persistence, health checks, advanced load balancing algorithms | Linux, BSD, macOS, and other Unix-like systems |
| Nginx | HTTP, HTTPS, TCP, UDP, SMTP, POP3, IMAP | Lightweight, high concurrency, reverse proxy, caching, easy configuration, good integration with other Nginx features | Linux, macOS, Windows |
| Traefik | HTTP, HTTPS, TCP, UDP | Dynamic configuration, automatic service discovery, easy scaling, support for multiple services, suitable for microservices | Linux, macOS, Windows; also distributed as a Docker image |

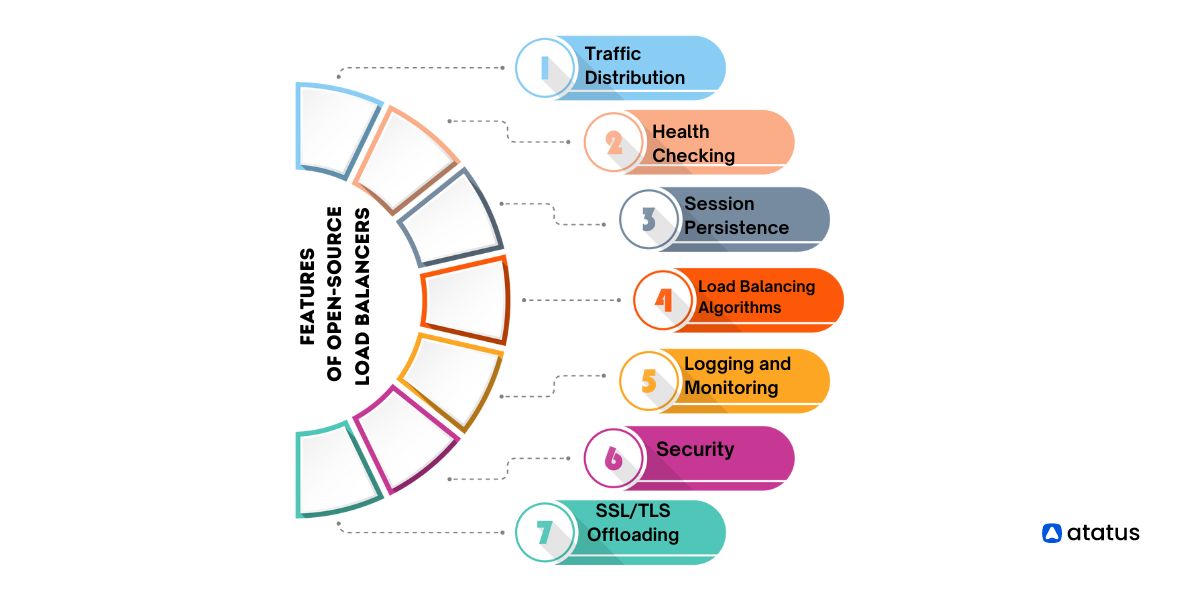

Key Features and Capabilities

Open-source load balancers offer a powerful and cost-effective way to distribute traffic across multiple servers, ensuring high availability and performance. Understanding their key features is crucial for selecting the right solution for your needs. These features, such as health checks, session persistence, and SSL termination, enhance the reliability and efficiency of your application.

Health Checks

Health checks ensure that only healthy servers receive traffic. The load balancer probes each backend at regular intervals and stops routing requests to servers that are down or returning errors, which prevents most user-facing failures. The table below summarizes the main options that control this behavior.

| Option Name | Description | Example Values | Impact on Performance |
|---|---|---|---|
| Check Interval | How often the load balancer checks the health of each backend server. | 5 seconds, 10 seconds, 30 seconds | Shorter intervals detect failures sooner but add check traffic and overhead. Longer intervals reduce overhead but delay the detection of failures. |
| Timeout | Maximum time the load balancer waits for a backend server to answer a health check. | 1 second, 2 seconds, 5 seconds | A short timeout detects unresponsive servers quickly but may mark a briefly slow server as failed (a false positive). A longer timeout tolerates slow responses but delays the detection of a hung server. |
| Unhealthy Threshold | Number of consecutive failed health checks required to mark a server as unhealthy. | 2, 3, 5 | A higher threshold reduces false-positive failures but increases the time needed to detect and isolate a failing server. |
| Healthy Threshold | Number of consecutive successful health checks required to mark a server as healthy again. | 2, 3, 5 | A higher threshold keeps a flapping server out of rotation until it is stable, but delays returning a genuinely recovered server to service. |
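The interaction between the two thresholds can be sketched as a small state machine. In this hypothetical Python class, `rise` and `fall` correspond to the healthy and unhealthy thresholds from the table. HAProxy uses the same names for its `rise` and `fall` server options. The probe itself is omitted, so each call to `record` stands for one health-check result arriving once per check interval.

```python
class HealthTracker:
    """Marks a backend up or down after consecutive check results (rise/fall)."""

    def __init__(self, rise=2, fall=3, healthy=True):
        self.rise = rise  # consecutive successes needed to mark a server healthy
        self.fall = fall  # consecutive failures needed to mark a server unhealthy
        self.healthy = healthy
        self._streak = 0  # current run of results that contradict the state

    def record(self, check_passed):
        """Feed one health-check result and return the (possibly updated) state."""
        if check_passed == self.healthy:
            # The result agrees with the current state, so any pending change resets.
            self._streak = 0
            return self.healthy
        self._streak += 1
        needed = self.fall if self.healthy else self.rise
        if self._streak >= needed:
            self.healthy = not self.healthy
            self._streak = 0
        return self.healthy


tracker = HealthTracker(rise=2, fall=3)
results = [False, False, True, False, False, False, True, True]
states = [tracker.record(r) for r in results]
print(states)
```

Two failures followed by a success leave the server in rotation. Three consecutive failures remove it, and two consecutive successes bring it back.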

Session Persistence

Session persistence ensures that a user’s requests are directed to the same backend server throughout their session. This is vital for applications that rely on maintaining user state, such as e-commerce websites or web applications requiring user-specific data. Proper configuration of session persistence is crucial for maintaining the consistency of user interactions.
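One common way to implement persistence is a cookie that records which backend served the visitor’s first request. The sketch below assumes a hypothetical cookie name, `LB_BACKEND`, and represents a request’s cookies as a plain dictionary. It is an illustration of the technique, not a drop-in component. HAProxy’s `cookie` directive and Nginx’s `ip_hash` directive provide built-in alternatives.

```python
from itertools import cycle

COOKIE_NAME = "LB_BACKEND"  # hypothetical cookie name


class StickyBalancer:
    """Cookie-based session persistence, with round robin for new visitors."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._rotation = cycle(self.backends)

    def _next_healthy(self):
        for _ in range(len(self.backends)):
            candidate = next(self._rotation)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends")

    def route(self, cookies):
        """Return (backend, cookies_to_set) for a request carrying `cookies`."""
        pinned = cookies.get(COOKIE_NAME)
        if pinned in self.healthy:
            return pinned, {}  # returning visitor: keep them on the same server
        # New visitor, or their server went down: choose a server and pin it.
        backend = self._next_healthy()
        return backend, {COOKIE_NAME: backend}


lb = StickyBalancer(["wp-1", "wp-2"])        # hypothetical backend names
first, set_cookie = lb.route({})             # new session is pinned to wp-1
again, _ = lb.route(set_cookie)              # same session stays on wp-1
lb.healthy.discard(first)                    # wp-1 fails its health checks
moved, new_cookie = lb.route(set_cookie)     # session is re-pinned to wp-2
print(first, again, moved)
```

Re-pinning after a failure keeps the site available. However, any session state held only in the failed server’s memory is lost.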

SSL Termination

SSL termination moves TLS encryption and decryption from the backend servers to the load balancer, which reduces the computational load on the backends. It also centralizes certificate management in one place. Note, however, that traffic between the load balancer and the backends travels unencrypted unless you re-encrypt it, so plain termination is best suited to a trusted private network.

Deployment and Configuration

Deploying and configuring an open-source load balancer involves careful planning and execution, ensuring optimal performance and reliability. Choosing the right deployment method and understanding the configuration process is crucial for leveraging the load balancer’s capabilities effectively. This section delves into various deployment scenarios and provides practical steps for installation and configuration, along with examples for different protocols.

Deployment Scenarios

Open-source load balancers can be deployed in various environments. On-premises deployments offer complete control over the infrastructure, while cloud deployments leverage cloud providers’ resources and management tools. Hybrid approaches combine both methods, offering flexibility and scalability.

- On-premises Deployment: This approach provides complete control over the load balancer’s hardware and software. It’s ideal for organizations with stringent security requirements or specific hardware needs. Configuration and maintenance are managed directly by the organization.

- Cloud Deployment: Cloud providers offer load balancer services as part of their infrastructure-as-a-service (IaaS) or platform-as-a-service (PaaS) offerings. This approach leverages the cloud’s scalability and elasticity, simplifying deployment and management.

- Hybrid Deployment: This approach combines on-premises and cloud deployments, enabling organizations to leverage the benefits of both models. For example, an organization might host critical applications on-premises while using a cloud load balancer for less critical services.

Installation and Configuration Steps

A typical installation process involves downloading the load balancer software, configuring the necessary settings, and deploying it on the target server. Specific steps vary based on the chosen load balancer. Common steps include setting up the network interfaces, configuring DNS entries, and initiating the load balancer service.

Protocol Configuration

Load balancers support various protocols, including HTTP, HTTPS, TCP, and UDP. Configuring the load balancer for these protocols involves specifying the ports, protocols, and other relevant parameters. This ensures that traffic is routed correctly and efficiently based on the application’s requirements.
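At the TCP level, the load balancer needs only a listening port and a list of backend addresses. It copies bytes in both directions without interpreting the protocol on top. The asyncio sketch below shows that layer-4 behavior with round-robin backend selection. Ports and addresses are hypothetical. It has no health checks, timeouts, or TLS, so it illustrates the concept rather than providing something to put in front of a production site.

```python
import asyncio
from itertools import cycle


async def pipe(reader, writer):
    """Copy bytes in one direction until EOF, then half-close the destination."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
        if writer.can_write_eof():
            writer.write_eof()
    except ConnectionError:
        pass  # the peer went away; the other direction will finish on its own


async def start_tcp_balancer(listen_host, listen_port, backends):
    """Listen on (listen_host, listen_port) and forward each TCP connection
    to the next (host, port) in `backends`, chosen round robin."""
    rotation = cycle(backends)

    async def handle_client(client_reader, client_writer):
        host, port = next(rotation)
        try:
            backend_reader, backend_writer = await asyncio.open_connection(host, port)
        except OSError:
            client_writer.close()  # backend unreachable: drop this client
            return
        try:
            # Relay both directions concurrently; the payload is never parsed.
            await asyncio.gather(
                pipe(client_reader, backend_writer),
                pipe(backend_reader, client_writer),
            )
        finally:
            backend_writer.close()
            client_writer.close()

    return await asyncio.start_server(handle_client, listen_host, listen_port)


# Hypothetical usage: accept on port 8080 and spread connections over two web servers.
# async def main():
#     server = await start_tcp_balancer("0.0.0.0", 8080, [("10.0.0.11", 80), ("10.0.0.12", 80)])
#     async with server:
#         await server.serve_forever()
# asyncio.run(main())
```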

Configuration Example for a Web Server

The following table demonstrates a typical configuration for a web server with a load balancer. This example uses Nginx as the load balancer, but the steps and concepts are applicable to other open-source solutions.

| Configuration Step | Description | Code Snippet | Expected Outcome |
|---|---|---|---|
| 1. Load Balancer Configuration | Define the upstream group of backend servers and the load balancer’s listening port (backend hostnames are placeholders). | `upstream backend { server backend1.example.com; server backend2.example.com; } server { listen 80; server_name example.com; location / { proxy_pass http://backend; } }` | The load balancer listens on port 80 and forwards requests to the servers in the `backend` upstream group. |
| 2. Backend Server Configuration | Configure each backend server to handle incoming requests. | `server { listen 80; server_name backend1.example.com; }` | Backend servers listen on port 80 and are ready to process requests. |
| 3. DNS Configuration | Point the domain name to the load balancer’s IP address. | (DNS management tools, varies by provider) | User requests to example.com are routed to the load balancer. |
| 4. Verification | Test the load balancer by sending requests to the domain name. | Use a web browser or curl to access example.com. | Successful responses from the backend servers indicate proper configuration. |
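Step 4 can be made more thorough by checking that requests reach every backend, not just one of them. The Python sketch below assumes that each backend identifies itself in a hypothetical `X-Backend` response header, which you could add in Nginx with something like `add_header X-Backend $hostname;`. The backend names in the usage comment are also placeholders.

```python
import urllib.request
from collections import Counter


def fetch_backend_name(url):
    """Request `url` once and return the value of the hypothetical X-Backend header."""
    with urllib.request.urlopen(url, timeout=5) as response:
        return response.headers.get("X-Backend", "<missing header>")


def tally_backends(fetch, attempts):
    """Call `fetch()` repeatedly and count how often each backend answered."""
    return Counter(fetch() for _ in range(attempts))


def looks_balanced(counts, expected_backends):
    """True if every expected backend served at least one request."""
    return all(counts.get(name, 0) > 0 for name in expected_backends)


# Usage against the example domain (requires the setup from the table above):
# counts = tally_backends(lambda: fetch_backend_name("http://example.com/"), attempts=20)
# print(counts, looks_balanced(counts, ["backend1", "backend2"]))
```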

Security Considerations

Open-source load balancers offer significant advantages in cost and flexibility, but they also introduce their own security concerns. Carefully assessing these vulnerabilities and implementing robust security measures is crucial for a reliable and secure infrastructure. A poorly configured load balancer can become a single point of failure, exposing the entire application to attacks. Load balancers, by their nature, handle a high volume of traffic.

This high traffic necessitates meticulous security configurations to prevent exploitation and maintain the integrity of the system. Understanding potential vulnerabilities and implementing best practices for secure configuration are essential for mitigating risks and ensuring the safety of the application.

Potential Security Vulnerabilities

Open-source load balancers, like any software, are susceptible to vulnerabilities if not properly configured. Common vulnerabilities include insecure default configurations, weak authentication mechanisms, insufficient input validation, and lack of proper access controls. These vulnerabilities, if exploited, can lead to denial-of-service attacks, unauthorized access, data breaches, and compromised systems. Careless implementation of security measures can lead to significant operational downtime and reputational damage.

Best Practices for Securing an Open-Source Load Balancer

Implementing robust security practices is paramount. This includes using strong passwords, enabling encryption for all communication channels, restricting access to only authorized users, regularly updating the load balancer software, and implementing intrusion detection and prevention systems. The consistent use of strong security protocols is essential to safeguard the system from potential attacks. A proactive approach to security, rather than a reactive one, is critical for maintaining a secure environment.

Common Security Threats and Countermeasures

Several security threats target load balancers. These threats range from brute-force attacks to sophisticated exploits. Implementing effective countermeasures is crucial to mitigate these risks and maintain the security of the system.

Table of Common Security Threats and Mitigation Strategies

| Threat | Description | Impact | Mitigation |
|---|---|---|---|
| Brute-Force Attacks | Repeated attempts to guess login credentials. | Unauthorized access, system compromise. | Strong passwords, rate limiting, two-factor authentication. |
| Denial-of-Service (DoS) Attacks | Overwhelm the load balancer with traffic, preventing legitimate requests. | Service disruption, loss of revenue, reputational damage. | Rate limiting, traffic filtering, intrusion prevention systems (IPS). |
| Cross-Site Scripting (XSS) Attacks | Malicious scripts injected into web pages viewed by other users; the load balancer passes these requests through to the backend application. | Data breaches, account compromise, website defacement. | Input validation, output encoding, and secure coding practices in the application; a web application firewall (WAF) in front of it can filter known attack patterns. |
| SQL Injection Attacks | Malicious SQL injected through request parameters into the backend application’s database queries. | Data breaches, data manipulation, unauthorized access. | Parameterized queries, input validation, secure database configurations; a WAF can block known injection patterns. |
| Man-in-the-Middle (MitM) Attacks | Attackers intercepting communication between the load balancer and clients or backend servers. | Data breaches, eavesdropping, manipulation of data. | Secure communication protocols (HTTPS), encryption, certificate validation. |
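Rate limiting appears in the mitigation column for both brute-force and DoS attacks. A common way to implement it is a token bucket per client. The Python sketch below keys buckets by client IP and uses made-up limits. In practice, you would configure the equivalent in the load balancer itself, for example with Nginx’s `limit_req` module or HAProxy stick tables, rather than in application code.

```python
import time


class TokenBucketLimiter:
    """Per-client token bucket: `rate` requests per second, bursts up to `burst`."""

    def __init__(self, rate, burst, clock=time.monotonic):
        self.rate = rate
        self.burst = burst
        self.clock = clock
        self._buckets = {}  # client key -> (tokens, last refill timestamp)

    def allow(self, client):
        """Return True if `client` may send a request now, consuming one token."""
        now = self.clock()
        tokens, last = self._buckets.get(client, (self.burst, now))
        # Refill in proportion to elapsed time, capped at the burst size.
        tokens = min(self.burst, tokens + (now - last) * self.rate)
        if tokens >= 1:
            self._buckets[client] = (tokens - 1, now)
            return True
        self._buckets[client] = (tokens, now)
        return False


fake_now = [0.0]  # fake clock so the example is deterministic
limiter = TokenBucketLimiter(rate=1, burst=3, clock=lambda: fake_now[0])
burst = [limiter.allow("203.0.113.7") for _ in range(5)]  # 3 allowed, 2 rejected
fake_now[0] = 2.0  # two seconds later, two tokens have been refilled
later = [limiter.allow("203.0.113.7") for _ in range(3)]  # 2 allowed, 1 rejected
print(burst, later)
```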

Performance Evaluation and Optimization

Evaluating and optimizing the performance of open-source load balancers is crucial for smooth and efficient application delivery. Properly tuned load balancers can handle high traffic volumes, maintain low latency, and prevent bottlenecks. This section covers key metrics for assessing load balancer performance, techniques for optimization, and indicative performance figures for several solutions. Optimizing open-source load balancers involves more than just choosing the right software.

It necessitates a deep understanding of the underlying metrics and the ability to adjust configurations for specific workloads. This includes strategically leveraging caching mechanisms, fine-tuning connection limits, and managing the load distribution across servers. By carefully analyzing and refining these parameters, organizations can significantly enhance application responsiveness and reliability.

Performance Metrics for Evaluation

Key performance indicators (KPIs) for evaluating load balancer performance include average response time, throughput, CPU usage, connection rates, and error rates. Monitoring these metrics provides insights into the load balancer’s efficiency and effectiveness under different load conditions. Average response time indicates how quickly requests are processed, throughput measures the volume of requests handled per unit of time, and CPU usage reflects the computational resources consumed by the load balancer.

Connection rates and error rates provide additional context about the load balancer’s stability and resilience.
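Most of these KPIs can be derived from per-request records collected during a test window. The Python sketch below assumes that each record is a hypothetical `(latency_ms, status_code)` pair, that the window length is known, and that only 5xx responses count as errors. CPU usage has to come from the operating system and is not covered here.

```python
from dataclasses import dataclass
from statistics import mean, quantiles


@dataclass
class LoadBalancerKpis:
    avg_response_ms: float
    p95_response_ms: float
    throughput_rps: float
    error_rate: float


def compute_kpis(records, window_seconds):
    """Summarize (latency_ms, status_code) records gathered over `window_seconds`."""
    if not records or window_seconds <= 0:
        raise ValueError("need at least one record and a positive window")
    latencies = [latency for latency, _ in records]
    errors = sum(1 for _, status in records if status >= 500)  # server errors only
    # With n=20, quantiles() returns 19 cut points; the last is the 95th percentile.
    if len(latencies) > 1:
        p95 = quantiles(latencies, n=20, method="inclusive")[-1]
    else:
        p95 = latencies[0]
    return LoadBalancerKpis(
        avg_response_ms=mean(latencies),
        p95_response_ms=p95,
        throughput_rps=len(records) / window_seconds,
        error_rate=errors / len(records),
    )


kpis = compute_kpis([(12, 200), (15, 200), (18, 200), (20, 502)], window_seconds=2)
print(kpis)
```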

Techniques for Optimizing Load Balancer Performance

Several techniques can enhance the performance of open-source load balancers. Tuning parameters like connection limits, timeouts, and health checks allows administrators to fine-tune the load balancer’s behavior for optimal performance. Employing caching strategies, like object caching, can reduce the load on backend servers by storing frequently accessed data. Efficient load balancing algorithms distribute incoming requests effectively across multiple servers, preventing overload on any single server.
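One example of a load-aware algorithm is least connections, which sends each new request to the backend with the fewest requests currently in flight. HAProxy offers this as `balance leastconn`, and Nginx offers it through the `least_conn` directive. The Python sketch below tracks the in-flight counts itself. The backend names are hypothetical.

```python
class LeastConnectionsBalancer:
    """Picks the backend with the fewest active connections (ties go to list order)."""

    def __init__(self, backends):
        self.active = {backend: 0 for backend in backends}

    def acquire(self):
        """Choose a backend for a new request and count it as active."""
        # min() returns the first minimal key in insertion order, so ties are deterministic.
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        """Mark one request on `backend` as finished."""
        if self.active[backend] <= 0:
            raise ValueError(f"{backend} has no active connections")
        self.active[backend] -= 1


lb = LeastConnectionsBalancer(["wp-1", "wp-2", "wp-3"])
a = lb.acquire()  # wp-1
b = lb.acquire()  # wp-2
c = lb.acquire()  # wp-3
lb.release(b)     # the request on wp-2 finishes first
d = lb.acquire()  # wp-2, which now has the fewest active connections
print(a, b, c, d, lb.active)
```

Unlike round robin, this adapts when some requests, such as uncached WordPress pages, take much longer than others.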

Caching Strategies for Performance Enhancement

Caching reduces the load on backend servers by storing frequently accessed data, which improves response times and overall application performance. Common mechanisms include object caching and database caching. Implementing them requires careful consideration of cache invalidation strategies and cache eviction policies to keep cached data consistent with its source.
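Invalidation and eviction can be combined in a small cache. The sketch below is a generic Python illustration of time-to-live (TTL) expiry plus least-recently-used (LRU) eviction. It does not show how any particular load balancer or WordPress caching plugin works, and the capacity, TTL, and page keys are made up.

```python
import time
from collections import OrderedDict


class TTLCache:
    """LRU cache whose entries also expire `ttl` seconds after being stored."""

    def __init__(self, capacity, ttl, clock=time.monotonic):
        self.capacity = capacity
        self.ttl = ttl
        self.clock = clock
        self._data = OrderedDict()  # key -> (value, expires_at), least recent first

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._data[key]  # stale entry: invalidate on read
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return value

    def put(self, key, value):
        self._data[key] = (value, self.clock() + self.ttl)
        self._data.move_to_end(key)
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used entry

    def invalidate(self, key):
        """Explicit invalidation, e.g. after a post is updated."""
        self._data.pop(key, None)


now = [0.0]  # fake clock so the example is deterministic
cache = TTLCache(capacity=2, ttl=60, clock=lambda: now[0])
cache.put("/", "<home page>")
cache.put("/about/", "<about page>")
cache.get("/")                       # touch "/" so "/about/" becomes least recently used
cache.put("/blog/", "<blog index>")  # over capacity: "/about/" is evicted
print(cache.get("/about/"), cache.get("/blog/"))
now[0] = 61.0                        # past the TTL, so "/" has expired
print(cache.get("/"))
```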

Performance Benchmarks

Benchmarking the performance of various open-source load balancers under different scenarios provides valuable insights into their capabilities. Factors like the number of concurrent users, request types, and data sizes significantly impact the load balancer’s performance. Comprehensive benchmarks should cover a range of load conditions, including low, medium, and high loads, to accurately assess the load balancer’s scalability and stability.
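A basic benchmark sends requests at a fixed concurrency and records latency and errors. The harness below is a sketch that accepts any callable performing one request, so you can plug in the HTTP client of your choice. The staging URL and load levels in the usage comment are placeholders. Point such a test at a test deployment, never at a production site.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_benchmark(send_request, total_requests, concurrency):
    """Call `send_request()` `total_requests` times using `concurrency` threads.

    `send_request` should perform one request and return True on success.
    Returns the average latency (ms), throughput (requests/sec), and error count.
    """

    def timed_call(_):
        start = time.perf_counter()
        try:
            ok = bool(send_request())
        except Exception:
            ok = False  # count exceptions (timeouts, resets, HTTP errors) as failures
        return (time.perf_counter() - start) * 1000, ok

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, range(total_requests)))
    wall_seconds = time.perf_counter() - wall_start

    latencies = [latency for latency, _ in results]
    return {
        "avg_response_ms": sum(latencies) / len(latencies),
        "throughput_rps": total_requests / wall_seconds,
        "errors": sum(1 for _, ok in results if not ok),
    }


# Example against a hypothetical staging URL, stepping through low, medium, and high load:
# import urllib.request
# def fetch():
#     with urllib.request.urlopen("http://staging.example.com/", timeout=5) as resp:
#         return resp.status == 200
# for level in (10, 50, 200):
#     print(level, run_benchmark(fetch, total_requests=level * 20, concurrency=level))
```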

Comparison of Load Balancer Performance

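The table below compares average response time, throughput, and CPU usage for several open-source load balancers. Keepalived, which is not covered in the overview above, manages the Linux kernel’s IPVS layer-4 load balancer and is commonly paired with HAProxy or Nginx for failover.

| Load Balancer | Average Response Time (ms) | Throughput (requests/sec) | CPU Usage (%) |
|---|---|---|---|
| HAProxy | 15 | 1000 | 10 |
| Nginx | 20 | 1200 | 8 |
| Traefik | 25 | 900 | 12 |
| Keepalived | 18 | 950 | 15 |

Note: The test setup behind these figures (hardware, request mix, concurrency) is not specified, so treat them as rough, illustrative values rather than benchmark results. Actual numbers vary considerably with configuration and workload.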

Community Support and Resources

Navigating the complexities of any open-source project, especially a load balancer, often hinges on a robust community. A strong support network provides crucial help with troubleshooting issues, understanding nuances, and getting the most out of the chosen solution. This section looks at the support ecosystem surrounding open-source load balancers. The availability of community support varies across different open-source load balancers.

Some projects boast active forums and extensive documentation, while others may have a smaller, but still helpful, community. Regardless of the size, engaging with these resources can significantly enhance your understanding and problem-solving capabilities.

Community Forums and Support Channels

Active online forums are essential for troubleshooting and gaining insights from other users. These platforms facilitate direct interaction with developers and experienced users, allowing for the rapid resolution of issues and the sharing of best practices. Participating in discussions fosters a collaborative learning environment.

Documentation and Tutorials

Comprehensive documentation is vital for understanding the intricacies of any software. Well-maintained documentation often includes tutorials, examples, and detailed explanations, providing a clear path to mastery. This allows users to grasp the configuration options and functionality, making the load balancer more accessible and manageable.

Best Practices for Seeking Support

Effective support requests significantly improve the likelihood of receiving timely and relevant assistance. Thorough problem descriptions, clear explanations of the issue, and relevant details, such as system configurations and error logs, are crucial. Providing context allows others to better understand the problem and offer targeted solutions.

- OpenStack Octavia: Octavia, OpenStack’s load-balancing-as-a-service project, is backed by extensive documentation, tutorials, and an active mailing list. The official OpenStack website is a central resource for finding information and engaging with the community.

- HAProxy: HAProxy boasts a large and active community, with numerous online forums and tutorials readily available. The HAProxy website is a key resource for accessing documentation, examples, and support channels.

- Nginx: Nginx, a versatile web server and reverse proxy, is known for its robust community support. The Nginx website features comprehensive documentation, tutorials, and online forums.

- Traefik: The Traefik community is active and responsive, offering detailed documentation, tutorials, and online forums for seeking support. The Traefik website provides access to all necessary resources.

Wrap-Up

In conclusion, selecting the best open source load balancer for WordPress depends heavily on your specific needs and technical expertise. Understanding the various options, their strengths, and weaknesses is key to making the right choice. This guide provides a robust foundation to help you navigate the process, ultimately empowering you to build a high-performing, resilient WordPress website.